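Pass the compile option when loading the model: model = load_model('model.h5', compile=False). With compile=False, Keras skips restoring the optimizer and loss, which is enough when the model is only used for prediction.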

< create_dataset.py >

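- Collects landmark data while the webcam is recording. The original note says 1 minute 30 seconds, which matches three actions at secs_for_action = 30; with the five actions listed below, recording takes about 2 minutes 30 seconds, plus a 3-second pause before each action.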

import cv2
import mediapipe as mp
import numpy as np
import time, os

#actions = ['come', 'away', 'spin']
actions = ['one', 'two', 'three','four','five']
seq_length = 30
secs_for_action = 30

# MediaPipe hands model
mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils
hands = mp_hands.Hands(
    max_num_hands=1,
    min_detection_confidence=0.5,
    min_tracking_confidence=0.5)

cap = cv2.VideoCapture(0)

created_time = int(time.time())
os.makedirs('dataset', exist_ok=True)

while cap.isOpened():
    for idx, action in enumerate(actions):
        data = []

        ret, img = cap.read()

        img = cv2.flip(img, 1)

        # Show a 3-second notice before recording each action
        cv2.putText(img, f'Waiting for collecting {action.upper()} action...', org=(10, 30), fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=(255, 255, 255), thickness=2)
        cv2.imshow('img', img)
        cv2.waitKey(3000)

        start_time = time.time()

        while time.time() - start_time < secs_for_action:
            ret, img = cap.read()

            img = cv2.flip(img, 1)
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            result = hands.process(img)
            img = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

            if result.multi_hand_landmarks is not None:
                for res in result.multi_hand_landmarks:
                    joint = np.zeros((21, 4))
                    for j, lm in enumerate(res.landmark):
                        joint[j] = [lm.x, lm.y, lm.z, lm.visibility]

                    # Compute angles between joints
                    v1 = joint[[0,1,2,3,0,5,6,7,0,9,10,11,0,13,14,15,0,17,18,19], :3] # Parent joint
                    v2 = joint[[1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20], :3] # Child joint
                    v = v2 - v1 # [20, 3]
                    # Normalize v
                    v = v / np.linalg.norm(v, axis=1)[:, np.newaxis]

                    # Get angle using arccos of dot product
                    angle = np.arccos(np.einsum('nt,nt->n',
                        v[[0,1,2,4,5,6,8,9,10,12,13,14,16,17,18],:],
                        v[[1,2,3,5,6,7,9,10,11,13,14,15,17,18,19],:])) # [15,]

                    angle = np.degrees(angle) # Convert radian to degree

                    # Append the action index as the label (last column)
                    angle_label = np.array([angle], dtype=np.float32)
                    angle_label = np.append(angle_label, idx)

                    # 21 * 4 landmark values + 15 angles + 1 label = 100 values per frame
                    d = np.concatenate([joint.flatten(), angle_label])

                    data.append(d)

                    mp_drawing.draw_landmarks(img, res, mp_hands.HAND_CONNECTIONS)

            cv2.imshow('img', img)
            if cv2.waitKey(1) == ord('q'):
                break

        data = np.array(data)
        print(action, data.shape)
        np.save(os.path.join('dataset', f'raw_{action}_{created_time}'), data)

        # Create sequence data
        full_seq_data = []
        for seq in range(len(data) - seq_length):
            full_seq_data.append(data[seq:seq + seq_length])

        full_seq_data = np.array(full_seq_data)
        print(action, full_seq_data.shape)
        np.save(os.path.join('dataset', f'seq_{action}_{created_time}'), full_seq_data)
    break
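The script above derives 15 joint angles by taking the arccosine of the dot product of consecutive, normalized bone vectors. The sketch below restates that computation for a single joint in plain Python so the geometry can be checked by hand. The helper name `joint_angle_deg`, the zero-length check, and the clamping of the cosine to [-1, 1] are assumptions added for illustration; the script itself calls `np.arccos` without clamping, and `np.arccos` returns NaN if rounding pushes a dot product slightly outside that range.

```python
import math

def joint_angle_deg(parent, joint, child):
    """Angle in degrees between bone parent->joint and bone joint->child.

    Hypothetical helper: it mirrors the v1/v2 bone-vector idea of the
    script for one joint only, using plain tuples instead of NumPy.
    """
    bone_in = [j - p for p, j in zip(parent, joint)]
    bone_out = [c - j for j, c in zip(joint, child)]
    norm_in = math.sqrt(sum(x * x for x in bone_in))
    norm_out = math.sqrt(sum(x * x for x in bone_out))
    if norm_in == 0.0 or norm_out == 0.0:
        # Assumption: reject degenerate bones instead of dividing by zero
        raise ValueError("zero-length bone")
    cos_angle = sum(a * b for a, b in zip(bone_in, bone_out)) / (norm_in * norm_out)
    # Assumption: clamp so rounding error cannot leave the acos domain
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

# A straight finger segment and a right-angle bend
straight = joint_angle_deg((0, 0, 0), (1, 0, 0), (2, 0, 0))
bent = joint_angle_deg((0, 0, 0), (1, 0, 0), (1, 1, 0))
print(straight, bent)
```

With this definition a fully extended finger gives angles near 0 degrees and a right-angle bend gives about 90, which is why the angles separate finger-counting gestures well.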

< train.py >

import numpy as np
import os
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense
from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau
import matplotlib.pyplot as plt
from sklearn.metrics import multilabel_confusion_matrix
from tensorflow.keras.models import load_model

# '1' exposes only the second GPU; use '0' on a single-GPU machine
os.environ['CUDA_VISIBLE_DEVICES'] = '1'
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = 'true'

actions = [
    'one',
    'two',
    'three',
    'four',
    'five'
]

# The timestamp in each file name is the created_time printed by create_dataset.py
data = np.concatenate([
    np.load('dataset/seq_one_1659147525.npy'),
    np.load('dataset/seq_two_1659147525.npy'),
    np.load('dataset/seq_three_1659147525.npy'),
    np.load('dataset/seq_four_1659147525.npy'),
    np.load('dataset/seq_five_1659147525.npy')
], axis=0)

print(data.shape)

# The last column of every frame is the label; the rest are features
x_data = data[:, :, :-1]
labels = data[:, 0, -1]

print(x_data.shape)
print(labels.shape)

# One-hot encode the labels
y_data = to_categorical(labels, num_classes=len(actions))
print(y_data.shape)

x_data = x_data.astype(np.float32)
y_data = y_data.astype(np.float32)

x_train, x_val, y_train, y_val = train_test_split(x_data, y_data, test_size=0.1, random_state=2021)

print(x_train.shape, y_train.shape)
print(x_val.shape, y_val.shape)

model = Sequential([
    LSTM(64, activation='relu', input_shape=x_train.shape[1:3]),
    Dense(32, activation='relu'),
    Dense(len(actions), activation='softmax')
])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])
model.summary()

# Make sure the checkpoint directory exists before training
os.makedirs('models', exist_ok=True)

history = model.fit(
    x_train,
    y_train,
    validation_data=(x_val, y_val),
    epochs=200,
    callbacks=[
        ModelCheckpoint('models/model.h5', monitor='val_acc', verbose=1, save_best_only=True, mode='auto'),
        ReduceLROnPlateau(monitor='val_acc', factor=0.5, patience=50, verbose=1, mode='auto')
    ]
)

# Plot loss (left axis) and accuracy (right axis) per epoch
fig, loss_ax = plt.subplots(figsize=(16, 10))
acc_ax = loss_ax.twinx()

loss_ax.plot(history.history['loss'], 'y', label='train loss')
loss_ax.plot(history.history['val_loss'], 'r', label='val loss')
loss_ax.set_xlabel('epoch')
loss_ax.set_ylabel('loss')
loss_ax.legend(loc='upper left')

acc_ax.plot(history.history['acc'], 'b', label='train acc')
acc_ax.plot(history.history['val_acc'], 'g', label='val acc')
acc_ax.set_ylabel('accuracy')
acc_ax.legend(loc='upper left')

plt.show()

# Reload the best checkpoint and evaluate it on the validation split
model = load_model('models/model.h5')

y_pred = model.predict(x_val)

print(multilabel_confusion_matrix(np.argmax(y_val, axis=1), np.argmax(y_pred, axis=1)))
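The training script depends on the layout produced by create_dataset.py: each frame is a row of features with the label in the last column, and overlapping windows of `seq_length` frames are stacked into sequences. The plain-Python sketch below walks through that layout on a toy input with two features per frame instead of 99. The helper names `make_windows`, `split_features_labels`, and `one_hot` are hypothetical stand-ins for the NumPy slicing and `to_categorical` call in the scripts.

```python
def make_windows(frames, seq_length):
    """Overlapping windows, using the same range as create_dataset.py."""
    # range(len(frames) - seq_length) yields len(frames) - seq_length windows,
    # so the final possible window is not included
    return [frames[i:i + seq_length] for i in range(len(frames) - seq_length)]

def split_features_labels(windows):
    """Equivalent of data[:, :, :-1] and data[:, 0, -1] on nested lists."""
    x = [[frame[:-1] for frame in window] for window in windows]
    labels = [int(window[0][-1]) for window in windows]
    return x, labels

def one_hot(label, num_classes):
    """Stand-in for to_categorical on a single integer label."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

# Toy recording: 5 frames, 2 features each, all labeled as class 2
frames = [[0.1 * i, 0.2 * i, 2] for i in range(5)]
windows = make_windows(frames, seq_length=3)
x, labels = split_features_labels(windows)
y = [one_hot(label, num_classes=5) for label in labels]

print(len(windows), len(x[0]), len(x[0][0]))
print(labels, y[0])
```

In the real data the same steps turn a raw array of shape (N, 100) into sequences of shape (N - 30, 30, 100), and the model input becomes (30, 99) per sample once the label column is removed.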

< test.py >

# https://storage.googleapis.com/tensorflow/windows/gpu/tensorflow_gpu-2.6.0-cp37-cp37m-win_amd64.whl
import cv2
import mediapipe as mp
import numpy as np
from tensorflow.keras.models import load_model

#actions = ['come', 'away', 'spin']
actions = ['one', 'two', 'three','four','five']
seq_length = 30

#model = load_model('models/model2_1.0.h5') # -> come, away, spin
model = load_model('models/model.h5') # -> one, two, three, four, five

# MediaPipe hands model
mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils
hands = mp_hands.Hands(
    max_num_hands=1,
    min_detection_confidence=0.5,
    min_tracking_confidence=0.5)

cap = cv2.VideoCapture(0)

# w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
# h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
# fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v')
# out = cv2.VideoWriter('input.mp4', fourcc, cap.get(cv2.CAP_PROP_FPS), (w, h))
# out2 = cv2.VideoWriter('output.mp4', fourcc, cap.get(cv2.CAP_PROP_FPS), (w, h))

seq = []
action_seq = []

while cap.isOpened():
    ret, img = cap.read()
    if not ret:
        # Stop when no frame could be read from the camera
        break
    img0 = img.copy()

    img = cv2.flip(img, 1)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    result = hands.process(img)
    img = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

    if result.multi_hand_landmarks is not None:
        for res in result.multi_hand_landmarks:
            joint = np.zeros((21, 4))
            for j, lm in enumerate(res.landmark):
                joint[j] = [lm.x, lm.y, lm.z, lm.visibility]

            # Compute angles between joints
            v1 = joint[[0,1,2,3,0,5,6,7,0,9,10,11,0,13,14,15,0,17,18,19], :3] # Parent joint
            v2 = joint[[1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20], :3] # Child joint
            v = v2 - v1 # [20, 3]
            # Normalize v
            v = v / np.linalg.norm(v, axis=1)[:, np.newaxis]

            # Get angle using arccos of dot product
            angle = np.arccos(np.einsum('nt,nt->n',
                v[[0,1,2,4,5,6,8,9,10,12,13,14,16,17,18],:],
                v[[1,2,3,5,6,7,9,10,11,13,14,15,17,18,19],:])) # [15,]

            angle = np.degrees(angle) # Convert radian to degree

            # Same 99 features as in training (no label column here)
            d = np.concatenate([joint.flatten(), angle])

            seq.append(d)

            mp_drawing.draw_landmarks(img, res, mp_hands.HAND_CONNECTIONS)

            # Wait until a full window of frames has been collected
            if len(seq) < seq_length:
                continue

            input_data = np.expand_dims(np.array(seq[-seq_length:], dtype=np.float32), axis=0)

            y_pred = model.predict(input_data).squeeze()

            i_pred = int(np.argmax(y_pred))
            conf = y_pred[i_pred]

            # Ignore low-confidence predictions
            if conf < 0.9:
                continue

            action = actions[i_pred]
            action_seq.append(action)

            if len(action_seq) < 3:
                continue

            # Show the action only if the last three predictions agree
            this_action = '?'
            if action_seq[-1] == action_seq[-2] == action_seq[-3]:
                this_action = action

            cv2.putText(img, f'{this_action.upper()}', org=(int(res.landmark[0].x * img.shape[1]), int(res.landmark[0].y * img.shape[0] + 20)), fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=(255, 255, 255), thickness=2)

    # out.write(img0)
    # out2.write(img)
    cv2.imshow('img', img)
    if cv2.waitKey(1) == ord('q'):
        break
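The test script only draws a label after two filters: the top softmax probability must reach 0.9, and the last three accepted predictions must agree. The sketch below isolates that decision logic as a plain-Python function so it can be tried without a camera or a model. The function name `smooth_prediction` and its `threshold` and `required` parameters are hypothetical; the probability rows are made-up inputs, not real model output.

```python
def smooth_prediction(probs, history, actions, threshold=0.9, required=3):
    """Return the label to draw, '?' on disagreement, or None to draw nothing.

    Mirrors the filtering in test.py: low-confidence frames are skipped
    entirely, and accepted labels are appended to `history` in place.
    """
    i_pred = max(range(len(probs)), key=probs.__getitem__)  # argmax
    if probs[i_pred] < threshold:
        return None
    history.append(actions[i_pred])
    if len(history) < required:
        return None
    recent = history[-required:]
    return recent[-1] if len(set(recent)) == 1 else '?'

actions = ['one', 'two', 'three', 'four', 'five']
history = []
frames = [
    [0.95, 0.02, 0.01, 0.01, 0.01],    # confident 'one'
    [0.50, 0.30, 0.10, 0.05, 0.05],    # below threshold, skipped
    [0.92, 0.05, 0.01, 0.01, 0.01],    # confident 'one'
    [0.97, 0.01, 0.01, 0.005, 0.005],  # third 'one' in a row
    [0.01, 0.96, 0.01, 0.01, 0.01],    # switches to 'two'
]
shown = [smooth_prediction(p, history, actions) for p in frames]
print(shown)
```

Because skipped frames never enter the history, three agreeing predictions do not have to come from three adjacent camera frames, which matches how `action_seq` grows in the script.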

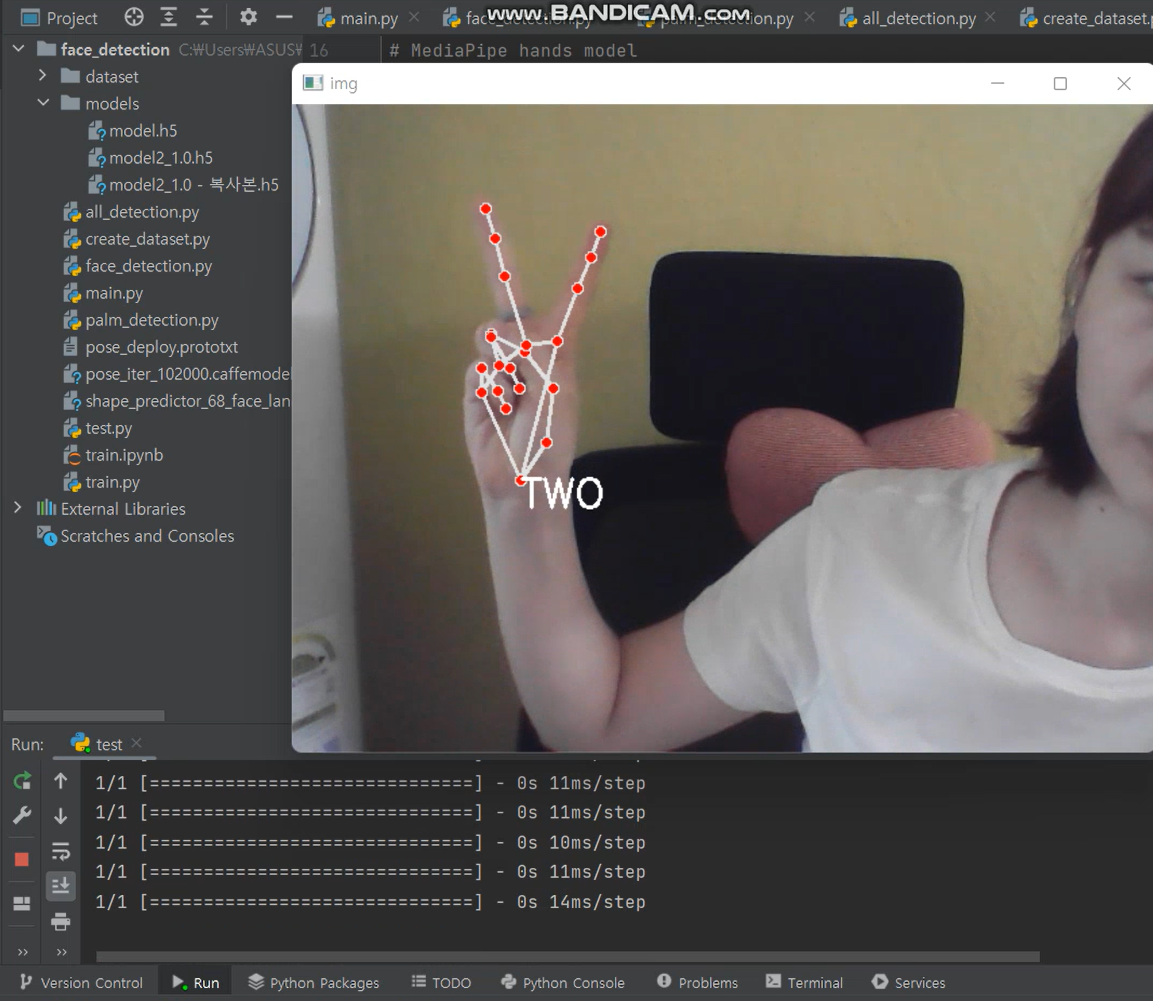

< Result screen >
